Marija (top right): how much data swirls around you - how much structured? Did not follow moderator invitation to Q&A puff her own role/bureaucracy

From Marija Manojlovic to Me, All Panelists: 10:30 AM

It is the End Violence Global Partnership/ Safe Online - here you can read more about our work https://www.end-violence.org/safe-online

From Me to Everyone: 10:31 AM

thks

From Danielle Alice Desanges Aucéane THIAM de GOGUENHEIM to Everyone: 10:31 AM

Thank you very much John.

From Marija Manojlovic to Everyone: 10:31 AM

Thank you everyone for joining!

From Noelli Amancio to Everyone: 10:31 AM

Thank you very much!

From Dushica Naumovska to Everyone: 10:31 AM

Thank you for a great and informative session! Dushica Naumovska, INHOPE

From Glenn McKnight to Everyone: 10:32 AM

All the best important issue

From Webinar Attendee to Everyone: 10:32 AM

Thank you for informative session indeed

From Danielle Alice Desanges Aucéane THIAM de GOGUENHEIM to Everyone: 10:32 AM

Thanks to all the keynote speakers. We need to stand up and keep our children safe: they are the future of the world

From John Tanagho to Everyone: 10:32 AM

Thank you everyone! You are part of the solution! Let's do this together! https://osec.ijm.org/news/

x AI to prevent child abuse: world leadership King/Queen of Sweden - previous keynote World Childhood

John Tanagho - AI slides - director, International Justice Mission, to end child exploitation in the developing world

- example: IJM partnered with Philippines government

UK example: SafeToNet

Yalda, Bracket Foundation

anecdote - we see a security camera (CCTV) can recognise a car accident and alert first responders - edu software to recognise accident

example of AI and machine learning -

we could do this - AI could recognise anything on the net which is streaming abuse of kids

again SafeToNet - software can alert parents if a child user is experiencing distress

==================

Irakli - overall educator

UAE has a Minister of AI!

over to Abdulrahman

recall UAE main 4-yearly platform ITU

very good Abdul

online: huge data challenge

3 areas to consider AI -

1 classify new material

verify it is exploitation

identify perpetrators - hash them

part 2

disrupt criminal activity

3 data children themselves generate

Q: what partners do you need, John? - law agencies - train AI tool on datasets - only law enforcement can own the training data - need tech wizard partners, private - Two Hat Security partnered with Royal Canadian Mounted Police - built tool CEASE.ai - diverse cultural - Interpol ..

neither side can do it on its own

Neil Fairbrother 09:13 AM

The EU’s proposed temporary derogation to the ePrivacy regulations makes the use of innovative new technologies illegal. This will impact all new products and services, AI-based or otherwise, that are designed to find and eliminate CSAM material, across the EU and in the UK, assuming the regulations are passed before the end of the transition period. What can AI development companies do to ensure this new regulation is amended to allow for innovation before it is passed?

Webinar Attendee 09:15 AM

is there any certification for this AI

Chat and Q&A 09:19 AM

Hello, certificates are not awarded for the webinar(s)

Webinar Attendee 09:18 AM

how do we align and allow developing countries to actually achieve a 'must do' attitude when they don't have the basics in place?

ALEGRIA KRIZIA JANE TOLEDO 09:28 AM

Can we have access to the recording of this meeting?

Chat and Q&A 09:29 AM

Hello, yes the recording will be available on our website https://aiforgood.itu.int/events/keeping-our-children-safe-with-ai/

ALEGRIA KRIZIA JANE TOLEDO 09:33 AM

Thank you for this!

Dr Grace Thomson 09:38 AM

(I have a question- For the training of the AI, would stock of such similar photos have to be used? How do you regulate this? What is the source of this?)

John Tanagho 09:45 AM

Good question. The AI would be trained in collaboration with law enforcement because the tool needs to be trained on real CSAM photos and videos, and only law enforcement can lawfully possess those. Two Hat Security did this with its CEASE.ai tool in partnership with the Royal Canadian Mounted Police (RCMP). So it takes a private-public partnership.

Ana Rodriguez 09:39 AM

Question to John Tanagho, how could you stop the creation of CSAM from the device? To do so wouldn't you have to be monitoring all material and then determine which is CSAM to "block" it? Wouldn't that be privacy invasive for users that are only using their phone and not creating CSAM?

John Tanagho 09:41 AM

Hi Ana - great question. The AI tool would only recognize and block CSAM. So it would not "see" any other photos or videos someone creates on their phone. Because the AI is trained on datasets of CSAM, it will only detect that content and block it. It won't view or screen all your photos, videos and livestreams.

Neil Fairbrother 09:48 AM

PhotoDNA is excellent at what it does, but its weakness is that it relies on the fact that the abuse has happened - it’s a retrospective analysis of content, the abuse has already happened.

Varun VM 09:00 AM

Since the pandemic, the reliance on AI-based technologies has increased, especially in the education sector. The classes are delivered online and children are using mobiles, laptops etc. for education. This makes them more vulnerable to various activities such as exposure to adult content, interactions with anti-socials etc. How can we prevent this using AI? Precisely, how can AI be used to prevent cyber crime against children?

You 09:20 AM

Do you know of any country that has a 21st-century minister of children, differentiated from a minister of education? It seems you are raising issues on how all media is designed through communities, not just the admin of schools; indeed, while real schools have been replaced by media, this seems to be an opportunity to clarify that the SDGs need a minister of children

Chaim Shine 09:23 AM

We are now giving mobile phones to village children in India. What would be the best routine for us to teach them how to use the internet safely? You mentioned so many organizations. Which developed the best program for us to use, and how do we do it? Is there a recommended routine?

Marija Manojlovic 09:44 AM

There are some really interesting programs in India working on these issues that you can partner with and learn from - http://aarambhindia.org/ is a really good group. I would also check on the local reporting and victim support organizations and get in touch with them so you can provide local support and resources to children, teachers and caregivers.

Chaim Shine 09:23 AM

practical

Neil Fairbrother 09:26 AM

Can AI be used to identify in real time the production of CSAM in encrypted live stream PPV & Direct CSA channels and then disrupt what the camera is seeing, so this material can’t be produced in the first place?

Sharon Pursey 09:29 AM

SafeToNet is an AI company working to detect and filter harmful content like CSAM. Can we discuss how we can train AI (like ours) given that it is illegal - how can we quickly collaborate to use this data to innovate? This is a big barrier.

Neil Fairbrother 09:32 AM

Grooming by definition makes people feel good. Can AI be used to parse out grooming conversations on a smartphone from normal conversations? What factors about a grooming conversation should be looked for in a real time AI engine?

Marija Manojlovic 09:49 AM

There are various clues being used - including the age difference and language patterns used by children as opposed to adults, the time between the first contact and the request for an image/nude… for now, the tools that are being used are largely used for evidencing on historical chats and not in real time, that is one of the big gaps…

Neil Fairbrother 09:50 AM

Thanks Marija - I think to be truly effective the disruption needs to be in real time.

Marija Manojlovic 09:52 AM

Agreed! This is particularly difficult and becoming even more so - due to E2EE chat apps… but it would certainly be a game changer… we are investing in some promising tools through our Safe Online work - https://www.end-violence.org/safe-online

Chaim Shine 09:36 AM

Why aren't we talking to the mobile phone manufacturers to put AI detectors on the phones they sell...

John Tanagho would like to answer this question live.

Sharon Pursey 09:49 AM

We are talking to Samsung UK and Ireland about embedding our AI from SafeToNet. Mobile phones should be “safe out of the box”

Webinar Attendee 09:41 AM

What happens when global tech companies make changes in response to organized citizenship advocating for certain rights, and by doing so negatively impact the most vulnerable?

How could hierarchies for good be established to ensure safety and protection of children as a priority and not an afterthought? ie Facebook's plan for end-to-end encryption of Messaging service towards citizen privacy will impede law enforcement's tracking online abuse of children, in huge spike since pandemic as stated here. NOTE: 14 million out of 18.4 million reports of CSEM last year came from Facebook platform (the New York Times).

Ana Rodriguez 09:53 AM

Thanks! That is great news, if you have any resources about this topic I would be grateful if you could share them

Neil Fairbrother 09:57 AM

Does Section 230 and equivalent legislation in other countries need to be at least amended so that social media companies have a legal obligation to proactively search for CSAM?

Abdul - being swamped by big tech; close account and report is not as useful as suspend and share with law enforcement - US companies don't mine the data, just circulate at raw level

14=3 million dollar competition

announce 14 Oct 2020 - Marija Manojlovic

From Neil Fairbrother to Everyone: 09:01 AM

Neil Fairbrother SafeToNet Foundation London

Thank you for joining today’s AI for Good webinar! Today’s session is on “Keeping our Children Safe with AI”, a partner session with the United Nations Interregional Crime and Justice Research Institute (UNICRI). Please feel free to use the chat function to message "all panelists" or "all panelists and attendees".

From Anthony Reidy to Everyone: 09:01 AM

Dublin, Ireland

From Bastiaan Quast (ITU) to Everyone: 09:01 AM

Joining from Geneva, Switzerland

From Erica Vidal to Everyone: 09:01 AM

Argentina

From Helen Meng to Everyone: 09:01 AM

Hong Kong

From Deepal Tewari to Everyone: 09:01 AM

Deepak Tewari- Lausanne

From Abdulrahman Ahmed Altamimi to Everyone: 09:01 AM

UAE

From Me to All Panelists: 09:01 AM

wash dc

From Dushica Naumovska to Everyone: 09:01 AM

Amsterdam - Dushica from INHOPE

From Mellanie Olano to Everyone: 09:01 AM

philippines IJM

From Michael Jung to Everyone: 09:01 AM

Phnom Penh, Cambodia

From John Tanagho to Everyone: 09:01 AM

Philippines

From Wendy O'Brien to Everyone: 09:01 AM

Vienna

From Alpha Bah to Everyone: 09:01 AM

Bangladesh

From Kiran Heer to Everyone: 09:01 AM

England, UK

From Rey Bicol to Everyone: 09:01 AM

Manila, Philippines

From Hanna-Leena Laitinen to Everyone: 09:02 AM

Helsinki,Finland

From COLLIN DIMAKATSO MASHILE to Everyone: 09:03 AM

Pretoria, South Africa

From Me to Everyone: 09:04 AM

chris.macrae@yahoo.co.uk near nih Bethesda 6 miles from white house! www.economistun.com

From Chat and Q&A to Everyone: 09:10 AM

We are excited to welcome Joanna Rubenstein (President & CEO, World Childhood Foundation USA) today!

Thank you to Irakli Beridze (Head of the Centre for Artificial Intelligence and Robotics at UNICRI) for facilitating this session.

From Me to Everyone: 09:11 AM

do you have a bookmark bio to Rubenstein and World Childhood - its depth/origin in the multiple livesmatter communities USA

From Danielle Alice Desanges Aucéane THIAM de GOGUENHEIM to Everyone: 09:14 AM

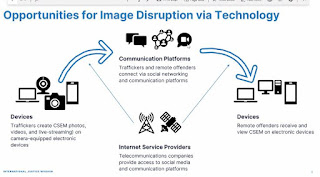

AI, has started to play a prominent part in child sexual abuse investigations, by helping to recognize, categorize and triage material. This technology is currently mostly used by law enforcement, however industry is now developing AI to recognize online child sexual abuse material.

From Danielle Alice Desanges Aucéane THIAM de GOGUENHEIM to Everyone: 09:21 AM

Thank you Joanna.

From Chat and Q&A to Everyone: 09:25 AM

We are excited to welcome John Tanagho (Director of International Justice Mission’s END OSEC Center), Yalda Aoukar (President, Bracket Foundation), Abdulrahman Ahmed Altamimi (Director of the Child Protection Center, Ministry of Interior United Arab Emirates (UAE)), and Marija Manojlovic (Safe Online Lead, Global Partnership to End Violence Against Children)!

From John Tanagho to Everyone: 09:28 AM

Great points Marija! COVID-19 pandemic has increased the risk of online sexual exploitation of children. Attendees wanting to learn more can find IJM's brief online: https://osec.ijm.org/news/wp-content/uploads/2020/06/2020-09-IJM-COVID-19-OSEC-Brief_-9.5.2020.pdf

From Danielle Alice Desanges Aucéane THIAM de GOGUENHEIM to Everyone: 09:33 AM

The scope of what AI can assist with in child sexual abuse investigations is huge. In the near future the hope is that AI will be able to analyze wider portions of data, images, ID, voice recognition...

From Dushica Naumovska to Everyone: 09:34 AM

Thank you Marija for mentioning the European Privacy Directive. We all should write to our MPs and make them aware of the need for tech companies to scan their platforms to identify known CSAM.

From Danielle Alice Desanges Aucéane THIAM de GOGUENHEIM to Everyone: 09:38 AM

Completely agree John and thanks.

another challenge is perfecting things like face recognition. The algorithm reads mature faces better than young ones, which in the scope of child sexual offence and Victim ID needs to change.

From John Tanagho to Everyone: 09:39 AM

Hello Sharon Pursey of SafeToNet!

From Glenn McKnight to Everyone: 09:59 AM

The GSMA has course on Mobile and Child Abuse

https://www.gsmatraining.com/course/children-and-mobile-technology/

We have incorporated into our free online Internet Governance course a discussion of this topic

www.virtualsig.org

From John Tanagho to Everyone: 09:59 AM

Great points, Yalda! Big tech must do more. IJM's OSEC study recommends that tech invest in improving proactive detection and robust reporting of these crimes. https://www.ijm.org/documents/studies/Final_OSEC-Public-Summary_05_20_2020.pdf

From Dushica Naumovska to Everyone: 10:00 AM

We at INHOPE use AI to process CSAM reports submitted to internet hotlines and make sure they are removed from the internet https://www.inhope.org/EN

From Phillippa Biggs to Everyone: 10:01 AM

Thank you Yalda, for a very helpful run-through of some of the major company initiatives, super-helpful!

From Dushica Naumovska to Everyone: 10:02 AM

The Aviator project is using AI to help law enforcement process reports that they receive from NCMEC - they have received from tech companies. - http://aviator.isfp.eu/

From John Tanagho to Everyone: 10:05 AM

On what Big tech is proposing to do better, see Project Protect announcement, with implementation being prepared: https://www.technologycoalition.org/2020/05/28/a-plan-to-combat-online-child-sexual-abuse/

And see the Voluntary Principles - the key is for everyone including governments and civil society to hold tech accountable to these promises - actions must follow: https://www.justice.gov/opa/press-release/file/1256061/download

From Hanna-Leena Laitinen to Everyone: 10:06 AM

Hi all, thank you for the insights, really important discussion. We, Protect Children, are part of the Project Arachnid alliance using AI, which I recommend all to check out: https://projectarachnid.ca/en/

Q&A

how to help developing countries - John - yes, it's a global crime - offenders internationally organised, so need international law enforcement collaboration - sharing referrals - been effective in Philippines - 64% of cases due to foreign referral

local law enforcement needs to build capacity

for making a better tomorrow” Episode 1 – Spatiotemporal Algal Bloom Prediction using Deep Learning

We aim to build real-time algal bloom control system to help decision making on early algae suppression using ML-based prediction

– Topic 1: Extreme data imbalance

Input datasets are inherently very skewed since algal blooms are rarely observed.

How can we train ML models from extremely imbalanced water quality data?

– Topic 2: Broad target data

Target area is too broad to collect all datasets.

How can we estimate chlorophyll-a concentration for all target areas?
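
Topic 1 above asks how to train on extremely imbalanced data. One common starting point, offered here only as a minimal sketch and not as the method used in the episode, is to weight each class inversely to its frequency so that the rare "bloom" class counts as much as the common one. The labels below are made-up illustrative data, and the function names `balanced_class_weights` and `weighted_error` are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Return {class: weight} with weight = n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def weighted_error(labels, predictions, weights):
    """Weighted misclassification rate: each sample counts by its true class weight."""
    total = sum(weights[y] for y in labels)
    wrong = sum(weights[y] for y, p in zip(labels, predictions) if y != p)
    return wrong / total


# Hypothetical data: 1 = bloom observed, 0 = no bloom (95 vs 5 samples).
labels = [0] * 95 + [1] * 5
weights = balanced_class_weights(labels)

# A model that always predicts "no bloom" is 95% accurate unweighted,
# but it is wrong on half of the weighted total.
always_no_bloom = [0] * len(labels)
print(weights)
print(weighted_error(labels, always_no_bloom, weights))
```

The same weights could be passed to a loss function during training; resampling the rare class is an alternative with similar intent.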
